INTRODUCTION

Fractures of the distal end of the radius are defined as those occurring within three centimeters of the radiocarpal joint¹. They have an incidence of approximately 1 in 10,000 people and account for 16% of all fractures of the human body². The most affected age group is 60 to 69 years, mainly women, but incidence among young people is rising because of traffic accidents and high-energy sports injuries¹⁻³. The high incidence in the elderly is associated with osteoporosis, female sex, white race, and early menopause¹⁻³.

The diagnosis of distal radius fractures is based on medical history, physical examination, and imaging, generally plain radiographs of the wrist in anteroposterior (AP) and lateral views¹⁻³. Fractures of the distal end of the radius are classified according to the pattern of the injury. Classifications are therefore important insofar as they help guide treatment decisions and estimate the prognosis of the fracture⁴.

The Frykman classification was, for many years, the most widely used system. It is based on the involvement of the articular surfaces of the radius, with fractures graded from 1 to 8⁵. The Universal or Rayhack classification was created in 1990 and modified by Cooney in 1993⁶. It distinguishes between intra- and extra-articular fractures, displaced or nondisplaced, and also considers their reducibility and stability⁶. The A.O./OTA Group classification was created in 1986 and revised in 1990. It divides fractures into extra-articular (type A), partial articular (type B), and complete articular (type C), with the three groups arranged in increasing order of severity with respect to morphological complexity, difficulty of treatment, and prognosis⁷.

The studies currently available in the literature use very different methodologies and report low intra- and interobserver reproducibility for the various classifications of distal radius fractures, with no consensus on which system should be used in daily practice or in scientific research⁴,⁸⁻¹⁰.

OBJECTIVE

The objective of this study is to evaluate the reproducibility of the three main classifications, to determine which has the highest intra- and interobserver agreement, and to assess whether the participants' stage of training influences the evaluation.

METHODS

This is an observational study including the imaging examinations of 14 patients diagnosed with a fracture of the distal end of the radius and seen in the emergency department of a public hospital from June to September 2017. All included patients had radiographs in two views, anteroposterior and lateral. Patients with an immature skeleton, without satisfactory radiographs, or with previous wrist fractures or deformities were excluded. For the assessment, 15 cases were presented to the evaluators, one patient being deliberately repeated to better assess intraobserver agreement.

Twelve orthopedists at different stages of training were selected as participants: eight members of the Brazilian Society of Orthopedics and Traumatology (two hand surgery specialists and six non-specialists) and four residents, one in the first year of training (R1), two in the second year (R2), and one in the third year (R3). The evaluators classified the fractures after a brief explanation of the classification systems, which they were allowed to consult at any time during the evaluation. Seven days later, the participants classified the same fractures again.

The study complied with all requirements regarding research involving human beings and was approved by the institution's Research Ethics Committee (substantiated opinion No. 2,294,348).

Statistical analysis

The weighted Kappa coefficient was used for the inferential analysis of intra- and interobserver agreement for the Frykman, Universal, and A.O. classifications. Student's t-test for paired samples was applied to determine whether the degree of interobserver agreement differed significantly between the classification systems. Kappa values were interpreted according to Landis and Koch (1977)¹¹: values below zero represent poor agreement; 0 to 0.20, slight; 0.21 to 0.40, fair; 0.41 to 0.60, moderate; 0.61 to 0.80, substantial; and above 0.80, almost perfect. The values obtained from the Kappa statistic were tested at a significance level of 5%.
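The statistics described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' analysis code. The study does not report its weighting scheme, so `weighted_kappa` assumes linear weights over an ordered list of categories (which treats, for example, Frykman types 1 to 8 as ordinal). It compares two sets of ratings of the same cases at a time: one evaluator's two rounds for intraobserver agreement, or two evaluators for interobserver agreement; how the study pooled its twelve evaluators into a single "general" value is not described. The function names `weighted_kappa`, `landis_koch_label`, and `paired_t_statistic` are hypothetical, and `paired_t_statistic` returns only the t statistic and degrees of freedom; the p-value would come from the t distribution, for example via `scipy.stats.ttest_rel`.

```python
import math
import statistics
from collections import Counter
from itertools import product


def weighted_kappa(ratings_a, ratings_b, categories):
    """Linearly weighted Cohen's kappa for two sets of ratings of the same cases.

    Assumption: linear weights over the order of `categories`; the study does
    not report which weighting scheme it used.
    """
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    if len(categories) < 2:
        raise ValueError("at least two categories are required")
    k, n = len(categories), len(ratings_a)
    index = {c: i for i, c in enumerate(categories)}
    a = [index[r] for r in ratings_a]
    b = [index[r] for r in ratings_b]
    joint = Counter(zip(a, b))               # observed (rater A, rater B) cell counts
    marg_a, marg_b = Counter(a), Counter(b)  # marginal counts for each rater
    observed = expected = 0.0
    for i, j in product(range(k), repeat=2):
        w = abs(i - j) / (k - 1)             # disagreement weight: 0 on the diagonal, 1 at the extremes
        observed += w * joint[(i, j)] / n
        expected += w * (marg_a[i] / n) * (marg_b[j] / n)
    if expected == 0:
        raise ValueError("kappa is undefined when chance disagreement is zero")
    return 1.0 - observed / expected


def landis_koch_label(kappa):
    """Map a kappa value to the Landis and Koch (1977) agreement bands."""
    if kappa < 0:
        return "poor"
    bands = ((0.20, "slight"), (0.40, "fair"), (0.60, "moderate"), (0.80, "substantial"))
    for upper, label in bands:
        if kappa <= upper:
            return label
    return "almost perfect"


def paired_t_statistic(x, y):
    """Student's t statistic and degrees of freedom for paired samples x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [xi - yi for xi, yi in zip(x, y)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("t is undefined when all paired differences are equal")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1
```

As a hypothetical example (not study data), with categories `["I", "II", "III"]`, one rater answering `I, II, III` and another `I, III, III` gives a linearly weighted kappa of 2/3 (about 0.67), which `landis_koch_label` places in the "substantial" band. Linear weights penalize a disagreement in proportion to its distance between categories; quadratic weights are a common alternative that penalize distant disagreements more heavily.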

RESULTS

Among the classifications, the Universal classification showed the best reproducibility, with an intraobserver Kappa index of 0.72, considered substantial. In the interobserver evaluation, this index fell to 0.48, moving from substantial to moderate reproducibility. The Frykman classification had an intraobserver Kappa index of 0.51, considered moderate, and an interobserver index of 0.36, considered fair. The A.O. classification showed fair intraobserver and interobserver reproducibility (κ = 0.38 and 0.25, respectively) (Tables 1 and 2).

Table 1 - General interobserver concordance of the Frykman, A.O., and Universal classifications for fractures of the distal end of the radius, using the Kappa index.

| System | Moment 1 Kappa | Moment 1 Concordance | Moment 2 Kappa | Moment 2 Concordance |
|---|---|---|---|---|
| Frykman | 0.36 | Fair | 0.41 | Moderate |
| Universal | 0.48 | Moderate | 0.47 | Moderate |
| A.O. | 0.25 | Fair | 0.29 | Fair |


Table 2 - General intraobserver concordance of the Frykman, A.O., and Universal classifications for fractures of the distal end of the radius, using the Kappa index.

| System | Kappa | Concordance |
|---|---|---|
| Frykman | 0.51 | Moderate |
| Universal | 0.72 | Substantial |
| A.O. | 0.38 | Fair |


When analyzing the classification of the repeated fracture, it was observed that only

one evaluator questioned that the same radiograph had been previously evaluated. However,

all the evaluators classified the lesion in the same way in at least one of the three

systems. The Frykman classification showed reproducibility equal to the Universal

classification, with seven correct answers, while that of group A.O. presented five

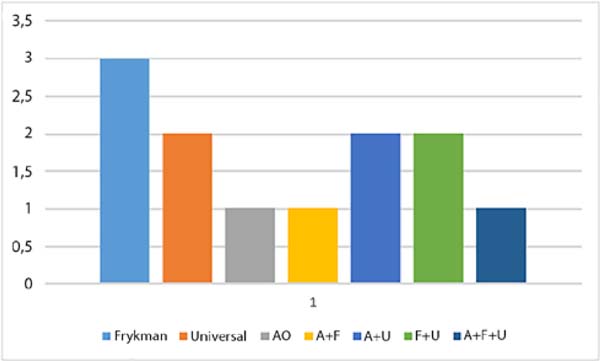

correct answers (Figure 1).

Figure 1 - General intraobserver concordance of the Frykman, A.O., and Universal classifications for fractures of the distal end of the radius.


Regarding the evaluators' level of training and experience, there was no statistically significant difference in the Kappa values (p > 0.05).

DISCUSSION

The ideal classification of any fracture should provide enough information to support appropriate treatment decisions and determine the prognosis, while also having satisfactory reproducibility and being easy to memorize¹². The reproducibility of a system is based on inter- and intraobserver agreement, and a useful classification must be reproducible so that it can be widely accepted and allow different series to be compared⁴,⁸. In the present study, we analyzed the reproducibility of classifications of distal radius fractures and observed greater inter- and intraobserver agreement for the Universal classification, followed by Frykman and, finally, A.O. As in this study, most studies in the literature used the inter- and intraobserver Kappa index to assess agreement among fracture classifications⁴,⁸,¹⁰.

Andersen et al., in 1996¹³, studied four classifications of distal radius fractures: Frykman, Melone, Mayo, and A.O. None of them showed substantial interobserver agreement (Kappa between 0.61 and 0.80). For the Frykman classification, intraobserver agreement ranged from 0.40 to 0.60, and the mean interobserver Kappa was 0.36. For the complete A.O. classification, mean intraobserver agreement ranged from 0.22 to 0.37; when the system was reduced to three categories, agreement of 0.58 to 0.70 was obtained. However, once reduced to three categories, the A.O. system is of questionable value compared with the other classifications.

Assessing the reproducibility of the A.O. classification in 30 radiographs of distal radius fractures classified by 36 observers with different levels of experience, Kreder et al., in 1996¹⁴, showed that interobserver agreement was best for the simplified classification (κ = 0.68) and progressively decreased when the groups (κ = 0.48) and subgroups (κ = 0.33) of the system were included. Intraobserver Kappa ranged from 0.25 to 0.42 for the A.O. system and from 0.40 to 0.86 for the simplified classification. The observers' level of experience made no difference in classifying "groups" and "subgroups."

Illarramendi et al., in 1998¹⁵, used 200 radiographs classified by six observers with different levels of experience. For the Frykman classification, they obtained moderate interobserver (κ = 0.43) and substantial intraobserver (κ = 0.61) reproducibility. For the A.O. classification, they found fair interobserver (κ = 0.37) and moderate intraobserver (κ = 0.57) reproducibility. However, to obtain these results, the authors simplified the Frykman and A.O. classifications, which improved the reproducibility of both; with the complete versions, such results might not have been achieved. Intraobserver reproducibility was greater than interobserver reproducibility, and agreement did not improve with increasing observer experience.

There is still no consensus on the ideal methodology for reproducibility studies of classifications, since both the number of imaging examinations analyzed and the number of evaluators influence the agreement of the answers¹³⁻¹⁵. In the study by Kreder et al., in 1996¹⁴, there were 30 images and 36 evaluators, whereas in that of Illarramendi et al., in 1998¹⁵, six participants assessed 200 images.

In the present study, we chose to limit the number of fractures to 15, with two views each, so as not to make the process tiring, which could have impaired the evaluations. Nevertheless, in agreement with previous studies, we found that the reproducibility of the classifications evaluated was not satisfactory, being substantial only for intraobserver agreement with the Universal classification; in all other analyses, agreement was fair to moderate¹³⁻¹⁵. Also in line with the cited studies, the participants' level of experience had little influence on the classification of distal radius fractures, since there was no significant difference between residents and specialists¹³,¹⁵.

In addition, unlike previous studies, we deliberately repeated one case to better assess intraobserver agreement. Most evaluators did not recognize that they were classifying a repeated radiograph, which reinforces how difficult it is to create a highly reproducible classification system.

CONCLUSION

The highest intra- and interobserver agreement was observed in the Universal classification, followed by Frykman and, finally, A.O.; however, the reproducibility of the classifications was not satisfactory, being substantial only for intraobserver agreement in the Universal classification. Furthermore, the reproducibility of the classifications did not depend on the evaluator's level of experience.

COLLABORATIONS

HM: Analysis and/or data interpretation, conception and design study, final manuscript approval, project administration, supervision, writing - original draft preparation, writing - review & editing.

LDG: Analysis and/or data interpretation, data curation, formal analysis, methodology, realization of operations and/or trials, writing - original draft preparation.

REFERENCES

1. Mallmin H, Ljunghall S. Incidence of Colles' fracture in Uppsala. A prospective study of a quarter-million population. Acta Orthop Scand. 1992 Apr;63(2):213-5.

2. Pires PR. Fraturas do rádio distal. In: Traumatologia Ortopédica. Rio de Janeiro: Revinter; 2004.

3. Bucholz RW, Heckman JD, Court-Brown CM, Tornetta P. Rockwood and Green's Fractures in Adults. 7th ed. Philadelphia: Lippincott Williams & Wilkins; 2009.

4. Belloti JC, Tamaoki MJS, Santos JBG, Balbachevsky D, Chap EC, Albertoni WM, et al. As classificações das fraturas do rádio distal são reprodutíveis? Concordância intra e interobservadores. São Paulo Med J. 2008;126(3):180-5.

5. Frykman G. Fracture of the distal radius, including sequelae of shoulder-hand syndrome: disturbance of the distal radio-ulnar joint and impairment of nerve function. A clinical and experimental study. Acta Orthop Scand Suppl. 1973;108:1.

6. Cooney WP. Fractures of the distal radius: a modern treatment-based classification. Orthop Clin North Am. 1993 Apr;24(2):211-6.

7. Hahn DM, Colton CL. Distal radius fractures. In: Rüedi TP, Murphy WM, eds. AO Principles of fracture management. New York: Medical Publishers; 2001. p. 357-77.

8. Oskam J, Kingma J, Klasen HJ. Interrater reliability for the basic categories of the AO/ASIF system as a frame of reference for classifying distal radial fractures. Percept Mot Skills. 2001 Apr;92(2):589-94.

9. Reis FB, Faloppa F, Saone RP, Boni JR, Corvelo MC. Fraturas do terço distal do rádio: classificação e tratamento. Rev Bras Ortop. 1994;29(5):326-30.

10. Oliveira Filho OM, Belangero WD, Teles JBM. Fraturas do rádio distal: avaliação das classificações. Rev Assoc Med Bras. 2004;50(1):55-61.

11. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977 Mar;33(1):159-74.

12. Belloti JC, Santos JBG, Erazo JP, Iani LJ, Tamaoki MJS, Moraes VY, et al. Um novo método de classificação para as fraturas da extremidade distal do rádio - a classificação IDEAL. Rev Bras Ortop. 2013;48(1):36-40.

13. Andersen DJ, Blair WF, Steyers Junior CM, Adams BD, El-Khouri GY, Brandser EA. Classification of distal radius fractures: an analysis of interobserver reliability and intraobserver reproducibility. J Hand Surg Am. 1996 Jul;21(4):574-82.

14. Kreder HJ, Hanel DP, McKee M, Jupiter J, McGillivary G, Swiontkowski MF. Consistency of AO fracture classification for the distal radius. J Bone Joint Surg Br. 1996 Sep;78(5):726-31.

15. Illarramendi A, Della Valle AG, Segal E, Carli P, Maignon G, Gallucci G. Evaluation of simplified Frykman and AO classifications of fractures of the distal radius. Assessment of interobserver and intraobserver agreement. Int Orthop. 1998;22(2):111-5.

1. Hospital Sírio-Libanês, Brasília, DF, Brazil.

2. Universidade de Brasília, Brasília, DF, Brazil.

3. Hospital Regional do Paranoá, Brasília, DF, Brazil.

Corresponding author: Henrique Mansur, Área Militar do Aeroporto Internacional de Brasília, Lago Sul, Brasília, DF, Brazil.

Zip Code: 71607-900. E-mail: henrimansur@globo.com

Article received: September 20, 2019.

Article accepted: February 22, 2020.

Conflicts of interest: none.